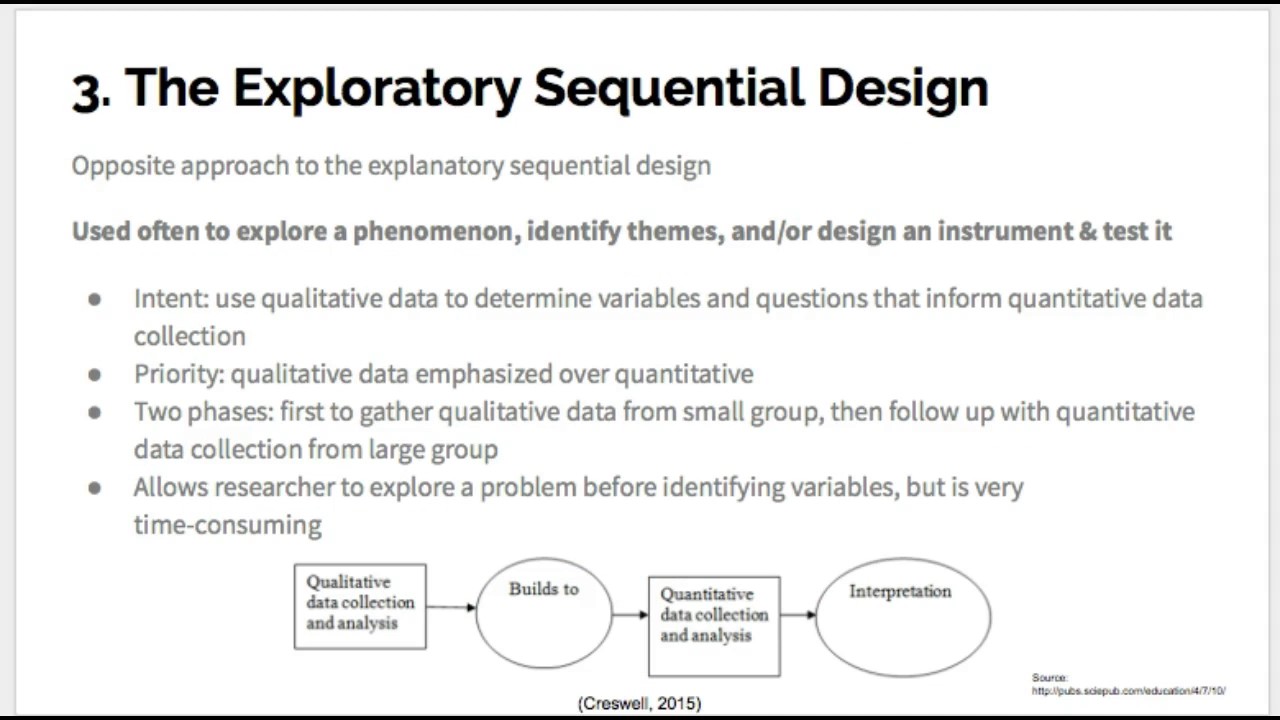

For the acceptance of the test results, agreement values range from 0 to 1, with 0 representing no agreement beyond chance and 1 representing absolute consensus. Krippendorff [44] posits that an alpha value of 0.800 is typically expected as an acceptable baseline, while 0.667 can be regarded as the lowest reasonable threshold (L.L.) at which tentative conclusions are permissible. In this paper, Scott's Pi is applied without considering the confidence interval, although a confidence interval would show how high the achieved reliability could plausibly be.

Complexity, then, depends not only on the number of components but also on the extent to which they depend on each other (e.g., when "one approach affects the formulation of the other"). A fourth way of mixing is to use a framework (theoretical or program) to bind together the data sets (Creswell and Plano Clark 2011, p. 76).
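To make the reliability check concrete, here is a minimal Python sketch of Scott's Pi for two coders, together with a percentile bootstrap confidence interval of the kind the paragraph notes was not reported. It assumes each coder assigns exactly one category per unit; the function names, bootstrap settings and toy codings are illustrative assumptions rather than the study's data or procedure, and applying Krippendorff's 0.800 and 0.667 cut-offs to Pi simply mirrors the text.

```python
import random
from collections import Counter
from typing import Hashable, List, Sequence, Tuple


def scotts_pi(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Scott's Pi for two coders who each assigned one category per unit."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty codings")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Expected agreement uses the pooled category proportions of BOTH coders.
    pooled = Counter(a) + Counter(b)
    expected = sum((count / (2 * n)) ** 2 for count in pooled.values())
    if expected == 1.0:  # every rating falls in one category: Pi is undefined
        return float("nan")
    return (observed - expected) / (1 - expected)


def interpret(value: float) -> str:
    """Hypothetical helper: compare a coefficient with the 0.800 / 0.667 cut-offs."""
    if value >= 0.800:
        return "acceptable"
    if value >= 0.667:
        return "tentative conclusions only"
    return "below the lower threshold"


def bootstrap_ci(a: Sequence[Hashable], b: Sequence[Hashable],
                 n_boot: int = 2000, level: float = 0.95,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap CI for Scott's Pi, resampling coded units with replacement."""
    rng = random.Random(seed)
    n = len(a)
    stats: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        value = scotts_pi([a[i] for i in idx], [b[i] for i in idx])
        if value == value:  # NaN != NaN, so undefined resamples are skipped
            stats.append(value)
    if not stats:
        raise ValueError("no resample produced a defined coefficient")
    stats.sort()
    low = stats[int((1 - level) / 2 * len(stats))]
    high = stats[int((1 + level) / 2 * len(stats)) - 1]
    return low, high


# Hypothetical codings of ten interview segments by two coders.
coder_a = ["pos", "pos", "neg", "neu", "pos", "neg", "neg", "pos", "neu", "pos"]
coder_b = ["pos", "neg", "neg", "neu", "pos", "neg", "pos", "pos", "neu", "pos"]
pi = scotts_pi(coder_a, coder_b)
low, high = bootstrap_ci(coder_a, coder_b)
print(f"Scott's Pi = {pi:.3f} ({interpret(pi)}), 95% CI [{low:.3f}, {high:.3f}]")
```

With only ten units the interval is wide, which is exactly the information a point estimate alone hides.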

How to Construct a Mixed Methods Research Design

In these designs, mixing occurs interactively at all stages of the study: at each stage, one approach affects the formulation of the other, and multiple types of implementation processes can occur. This raises the question of whether researchers should plan all these decisions beforehand or make them during, and depending on the course of, the research process. On the one hand, a researcher should decide beforehand which research components to include in the design, so that the conclusions drawn will be robust. On the other hand, developments during the research will sometimes prompt the researcher to add components.

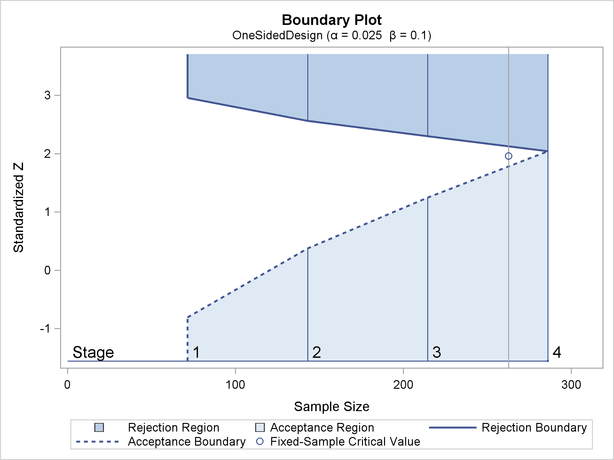

Explanatory Sequential Design - A two-phase design in which quantitative data are collected and analyzed first, and qualitative data are then collected and analyzed based on the quantitative results.

Convergent Mixed Methods Design - A one-phase design in which quantitative and qualitative data are both collected and analyzed, and the two sets of results are then compared to see whether they confirm or disconfirm each other.

The idea of group sequential designs is to choose critical bounds for the repeated analyses so that the overall type I error rate is held at 5%.
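Why the bounds matter can be seen in a small Monte Carlo sketch in Python: testing at the usual 1.96 cut-off at every interim look inflates the overall type I error well above 5%, while a constant Pocock-type bound keeps it near 5%. The choice of Pocock's bound (rather than, say, O'Brien-Fleming), equally spaced looks, a two-sided test and the simulation settings are assumptions for illustration; the critical values are the commonly tabulated Pocock constants for an overall two-sided alpha of 0.05.

```python
import math
import random

# Commonly tabulated two-sided Pocock constants for an overall alpha of 0.05,
# keyed by the number of equally spaced analyses (K = 1 is an ordinary z-test).
POCOCK_Z = {1: 1.960, 2: 2.178, 3: 2.289, 4: 2.361, 5: 2.413}


def type_one_error(k_looks: int, bound: float, n_sims: int = 20000, seed: int = 1) -> float:
    """Estimate P(|Z_k| >= bound at any of k_looks analyses) when H0 is true."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_sims):
        running_sum = 0.0
        for k in range(1, k_looks + 1):
            # Each stage adds one standardized block of data with mean 0 under H0.
            running_sum += rng.gauss(0.0, 1.0)
            z_k = running_sum / math.sqrt(k)  # cumulative z statistic at look k
            if abs(z_k) >= bound:
                false_positives += 1
                break  # the trial stops at the first boundary crossing
    return false_positives / n_sims


naive = type_one_error(5, 1.96)
pocock = type_one_error(5, POCOCK_Z[5])
print(f"5 looks at 1.96:       {naive:.3f}")
print(f"5 looks, Pocock bound: {pocock:.3f}")
```

The cumulative z statistic is built from independent stage increments, which reproduces the correlation between successive looks that makes naive repeated testing so costly.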

Future Developments or Limitations

Simultaneity indicates whether data collection is done concurrently or sequentially. Dependence indicates whether the implementation of one component depends on the results of the data analysis of the other component. As we will see in the example case studies, a concurrent design can include dependent data analysis, and a sequential design can include independent data analysis.

Learning Outcomes

As a result of the survey, a quantifiable framework for assessing senior leadership commitment to health and safety (H&S) in construction work was established to support future research. The primary design dimensions explained above have been the focus of this article, but researchers should also thoughtfully consider a number of secondary design issues and questions when constructing a strong mixed methods research design (Johnson and Christensen 2017). Teddlie and Tashakkori's (2009) multilevel mixed designs and fully integrated mixed designs are both complex designs, but for different reasons. A multilevel mixed design is more complex ontologically, because it involves multiple levels of reality.

Cross-Sequential Research

The authors wish to acknowledge the University of Johannesburg for the resources used to conduct this study (SPSS and ATLAS.ti). To ensure clarity in the key points and in the scope of the enquiry and assessment, Arksey and Knight [35] support the use of two interviewers; Whiting [36], however, contests this view, arguing that a single interviewer is sufficient, and substantiates this argument by providing robust interview guidelines.

4. PHASE 4: Predictive Modeling

Perhaps the simplest approach to design is to consult a single book and select one of the few designs it includes. Here we have shown that one often needs to construct a research design to fit one's unique research situation and questions. Far more important than a design name, however, is an accurate description of what was done in the study, so that the reader knows exactly how it was conducted. The overall goal of mixed methods research, which combines qualitative and quantitative research components, is to expand and strengthen a study's conclusions and, therefore, contribute to the published literature. In all studies, the use of mixed methods should contribute to answering the research questions. The Sequential Predictive Research Design (DISPRE) is a techno-methodological strategy for addressing complex research problems in the social sciences; it provides the integration needed to determine how qualitative and quantitative variables behave.

This could be one reason why our hypothetical research found a more optimistic picture of intelligence and aging as the years went by. To counter this, at each time of testing researchers could randomly recruit additional participants from the same cohort as the original members to replace those who have dropped out. Of course, the researcher's ethical conduct will shape the quality of the study's content. And because almost every science has someone who cleverly combines things, we have the sequential design, sometimes also referred to as a cross-sequential design, which combines longitudinal and cross-sectional designs by following several cohorts of different ages over time.
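The structure of a cross-sequential design can be sketched in a few lines of Python: each cohort, identified here by its age at first testing, is followed across the same testing waves, so one wave is a cross-sectional slice and one cohort is a longitudinal series. The starting ages and the 10-year spacing are hypothetical; the point is that some ages are observed in more than one cohort, which is what lets age differences be compared with cohort differences.

```python
from typing import Dict, List


def cross_sequential_grid(start_ages: List[int], years_elapsed: List[int]) -> Dict[int, List[int]]:
    """Age of each cohort (keyed by age at first testing) at every testing wave."""
    return {start: [start + years for years in years_elapsed] for start in start_ages}


def shared_ages(grid: Dict[int, List[int]]) -> Dict[int, List[int]]:
    """Ages observed in more than one cohort, mapped to the cohorts observed at that age."""
    seen: Dict[int, List[int]] = {}
    for cohort, ages in grid.items():
        for age in ages:
            seen.setdefault(age, []).append(cohort)
    return {age: cohorts for age, cohorts in sorted(seen.items()) if len(cohorts) > 1}


# Hypothetical design: three cohorts tested now, in 10 years and in 20 years.
grid = cross_sequential_grid([20, 40, 60], [0, 10, 20])
first_wave = [ages[0] for ages in grid.values()]  # cross-sectional slice
youngest_cohort = grid[20]                         # longitudinal series
print("Wave 1 (cross-sectional):", first_wave)
print("Cohort first tested at 20 (longitudinal):", youngest_cohort)
print("Ages seen in several cohorts:", shared_ages(grid))
```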

11. Survey Data Processing Approach

Following normality tests, which consisted principally of skewness and multivariate kurtosis as guiding descriptors, correlation and regression of the model variables were applied. In this regard, Mathison (1988) recommends determining whether deviating results shown by the data can be explained by knowledge about the research and/or knowledge of the social world. Differences between results from different data sources could also be the result of properties of the methods involved, rather than reflecting differences in reality (Yanchar and Williams 2006). In general, the conclusions of the individual components can be subjected to an inference quality audit (Teddlie and Tashakkori 2009), in which the researcher investigates the strength of each of the divergent conclusions. We recommend that researchers first determine whether there is "real" divergence, using the strategies mentioned above.
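The normality screening described above can be sketched in plain Python: univariate skewness for a single variable and Mardia's multivariate kurtosis for a set of model variables, whose value under multivariate normality is close to p(p + 2) for p variables. This is a minimal illustration using moment estimators and the usual large-sample z approximation; it is not necessarily the procedure used in the study, and the simulated data are an assumption for demonstration.

```python
import math
import random
from typing import List, Sequence, Tuple


def skewness(xs: Sequence[float]) -> float:
    """Moment-based sample skewness g1 = m3 / m2**1.5 (no small-sample correction)."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


def _invert(matrix: List[List[float]]) -> List[List[float]]:
    """Invert a small square matrix by Gauss-Jordan elimination with partial pivoting."""
    p = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(p)] for i, row in enumerate(matrix)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(p):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[p:] for row in aug]


def mardia_kurtosis(rows: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Mardia's b_{2,p} and its z statistic against the normal-theory value p(p + 2)."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    centred = [[r[j] - means[j] for j in range(p)] for r in rows]
    # Maximum-likelihood covariance (divisor n), as in Mardia's definition.
    cov = [[sum(c[i] * c[j] for c in centred) / n for j in range(p)] for i in range(p)]
    inv = _invert(cov)
    b2p = 0.0
    for c in centred:
        d2 = sum(c[i] * inv[i][j] * c[j] for i in range(p) for j in range(p))  # squared Mahalanobis distance
        b2p += d2 ** 2
    b2p /= n
    expected = p * (p + 2)
    z = (b2p - expected) / math.sqrt(8 * p * (p + 2) / n)
    return b2p, z


# Simulated, correlated bivariate normal data (an assumption for demonstration).
rng = random.Random(1)
data = []
for _ in range(2000):
    u, v = rng.gauss(0, 1), rng.gauss(0, 1)
    data.append([u, 0.5 * u + v])
b2p, z = mardia_kurtosis(data)
print(f"skewness of first variable: {skewness([row[0] for row in data]):.3f}")
print(f"Mardia b2p = {b2p:.3f} (normal-theory value 8), z = {z:.2f}")
```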

The three-stage sampling plan, with its two interim analyses, introduces a somewhat greater reduction in power, but it compensates with a greater reduction in expected sample size. Either interim-analysis strategy is more efficient than a single end-of-study analysis in terms of power per unit of sample size. Meanwhile, the social sciences dedicated to studying the complexity of organisations have been undergoing substantial changes in the methodologies used to generate scientific knowledge and support decision-making. Fetters and Molina-Azorin [25] found that articles applying mixed methods in the social sciences, especially in education, were cited more often than those that did not use them.
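The power and sample-size trade-off described above can be explored with a small Python simulation. It compares a single end-of-study analysis with two- and three-stage designs that share the same maximum sample size, reporting power and expected sample size (as a fraction of that maximum) when a true effect is present. The Pocock constant bounds, equally spaced stages and an effect sized for 80% power in the single-analysis design are illustrative assumptions, not the settings of any study cited here.

```python
import math
import random
from statistics import NormalDist
from typing import Tuple

# Commonly tabulated two-sided Pocock constants (overall alpha = 0.05).
POCOCK_Z = {1: 1.960, 2: 2.178, 3: 2.289}


def simulate_design(drift: float, k_stages: int, bound: float,
                    n_sims: int = 20000, seed: int = 3) -> Tuple[float, float]:
    """Return (power, expected sample size as a fraction of the maximum)."""
    rng = random.Random(seed)
    step_mean = drift / math.sqrt(k_stages)  # mean of each standardized stage increment
    rejections = 0
    fraction_used = 0.0
    for _ in range(n_sims):
        running_sum = 0.0
        for k in range(1, k_stages + 1):
            running_sum += rng.gauss(step_mean, 1.0)
            if abs(running_sum / math.sqrt(k)) >= bound:
                rejections += 1
                break  # stop early: the remaining stages are never run
        fraction_used += k / k_stages  # k is the stage at which the trial ended
    return rejections / n_sims, fraction_used / n_sims


# Drift (expected final z statistic) giving 80% power for one two-sided analysis.
nd = NormalDist()
drift = nd.inv_cdf(0.975) + nd.inv_cdf(0.80)

for stages in (1, 2, 3):
    power, ess = simulate_design(drift, stages, POCOCK_Z[stages])
    print(f"K={stages}: power={power:.3f}, expected n={ess:.2f} x max, "
          f"power per unit n={power / ess:.3f}")
```

Under these assumptions, adding looks lowers power slightly for a fixed maximum sample size while many trials stop early; in practice the maximum sample size is usually inflated a little to recover the lost power.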

Hypothesis testing is the last step in the model analysis; it is a critical step for testing whether the defined and refined study hypotheses still hold or should be rejected. According to Cho et al. [71], a generalised structured component analysis (GSCA) model, which is non-parametric, may be used to meet model fit requirements. Model fit refers to how well a model fits the data rather than how well the model's variables correlate; reliability, by contrast, relates to how consistently the model's measures capture their constructs. Because a model must statistically satisfy specific criteria before it can be described as fitting the data, these criteria must be defined before data collection [71]. Louangrath [67] proposed a set of interconnected tests for determining reliability in non-parametric data, including raw reliability estimates, Monte Carlo simulation and N.K.

Many bodies recommend that a sample-size planning procedure, such as a traditional null hypothesis significance testing (NHST) a priori power analysis, be conducted during the planning stages of a study. Power analysis allows the researcher to estimate how many participants are required to detect a minimally meaningful effect size at a specified level of power and Type I error rate. In this study, we explore how these three theoretical issues are reflected in applied psychological research, to better understand whether they are concerns in practice. To investigate how power analysis is currently used, this study reviewed the reporting of 443 power analyses in high-impact psychology journals in 2016 and 2017.
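As an illustration of a priori power analysis, the Python sketch below uses the standard normal approximation for a two-sided, two-sample comparison of means, n per group = 2((z_{1-α/2} + z_{1-β}) / d)², where d is the minimally meaningful standardized effect size. The function name and defaults are assumptions; tools that use the exact noncentral t distribution return slightly larger values (64 per group rather than 63 for d = 0.5, α = 0.05, power = 0.80).

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate per-group sample size for a two-sided, two-sample test of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    rounded up; it slightly underestimates the exact t-test answer.
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = std_normal.inv_cdf(power)           # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


# Cohen's conventional small, medium and large effects.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```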

That is, instead of conducting a study over many years, we can complete it in a short amount of time. Once the study is complete, the subjects go off on their merry way and never have to come back. This can help maintain high ecological validity, because the study looks a lot like the population you're interested in studying. Most importantly of all, we need ecological validity, which is defined as the extent to which findings can be applied to real-world situations. For example, if I claim that people will always do better on their tests and pay attention to their lessons, but I have to wrap a live electrode around their thumb to keep their attention, the finding would be said to have low ecological validity.

In addition, researchers argue that such a tool must fulfil stringent measurement criteria, such as reliability and responsiveness, to be regarded as a robust measuring instrument. During the sampling period, survey questionnaires were the primary means of data collection. Participants were asked to complete a survey addressing the test variables and research topics [40].

Two ways in which researchers collect data can be illustrated by comparing cross-sectional and longitudinal studies. This reinforces the assertion by Creswell [3] and Edmonds and Kennedy [1] about the benefits offered by this type of approach. To draw relevant inferences, QUAN analysis involves statistical analysis of many sampled cases, while QUAL analysis relies on selected, semi-representative cases or on descriptive representations in meta-analyses [23]. In this paper, the QUAL data were analysed using the inferential qualitative content analysis described by Mayring [53], following the coding procedure described by Adu [49]. According to Erlingsson and Brysiewicz [70] and Adu [49], compiling interview transcripts is a normal first step in qualitative content research. Qualitative content analysis aims to organise and summarise large amounts of material [2].
